Our brain tracks and evaluates the sounds that surround us. Without this “statistical learning” process, we would not be able to distinguish words from noise. Neuroscientist David McAlpine seeks to understand the neural principles of listening and to develop technologies for hearing-impaired people.

Hearing is critical for understanding the outside world. It gives us a sense of everything around us, which connects us to our environment and to other people. We can learn the acoustic features of a room and the sounds it reflects. We also know, without seeing it, when a dog is barking outside. All this sound is essentially a mixture of vibrating air molecules, which reaches our eardrums as a single wave. Our brain, however, can disentangle those sounds and present us with a rich listening experience.

One way our listening brain does this is through statistical learning – learning the structure of acoustic features. We can think of this as a kind of background learning. If you suddenly hear your name at a noisy cocktail party, you turn your head, right? Our brain constantly listens to all the sounds around us without our being aware of them. It builds up a pattern of what is happening, where and how, and it even evaluates whether specific events need to reach our conscious awareness.

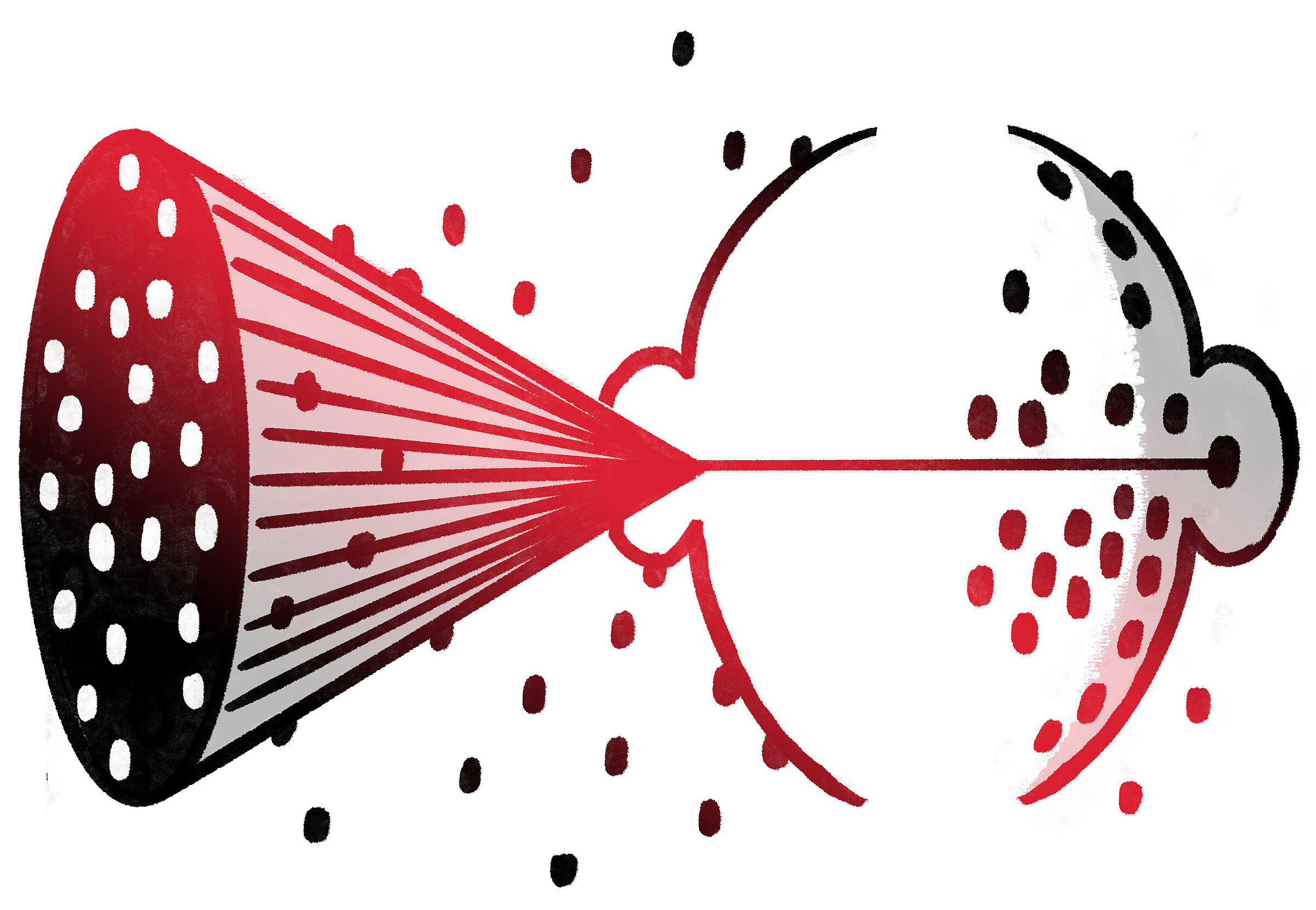

Listening happens in a constant loop. Information travels from the ear to the cortex, the outer layer of the brain, and all the way back to the ear, modifying the performance of the inner ear so it can adapt to the vast array of information around us. If you are reading a book, for example, your ears change their level of sensitivity. I am trying to understand how such adaptations come about.

As a young scientist, I wanted to change the world. Then I turned to fundamental neuroscience. But periodically, I ask myself: What do I want to do to change the world? I call this “rediscovery science”: remembering the passion for understanding and changing the world that drew us to science in the first place. In London, I became the founding director of the Ear Institute at University College London, with a mission to understand hearing and combat deafness. Nine years later, I moved to Macquarie University in Sydney. There, hearing research, clinical work, companies, government hearing services and charities all come together. We meet every month to make decisions and find ways to change the lives of hearing-impaired people.

Although I trained as a neuroscientist, I am very much driven by changing the lives of hearing-impaired people, including by developing a new generation of therapies. When people lose their hearing, they also lose the ability to connect to their environment, and this ability can be difficult to regain. No machine or algorithm can yet do what the brain does naturally: accurately separate out different sources of sound. This shows that we still do not fully understand the process of listening and the listening brain.

In Berlin, I am collaborating with Livia de Hoz at the Charité. We want to find out how statistical learning emerges along the pathway from the ear to the cortex. We also want to understand how the cortex then influences our ability to cancel out the tidal wave of noise and listen to speech during this process. New brain-imaging technologies have greatly advanced our understanding of the listening brain in humans and form a critical link back to animal studies. Here, we will work with mice to explore the brain mechanisms that underpin their ability to make decisions in different listening environments. Using optogenetic techniques, which use pulses of light to switch neuronal activity on and off, we hope to understand how the loop between the brain and the inner ear shapes the brain’s ability to learn from the listening environment.

Bringing this back to people with listening problems: people on the autism spectrum or with dyslexia may also have difficulties with statistical learning. When children are less communicative, it is often because they cannot cope with background noise and cannot separate out individual speakers, so they are unable to understand what is going on. We want to help develop algorithms for devices that can do what the brain usually does: adapt to environments and present sounds that are intelligible. That would improve the ability of hearing-impaired and neurodiverse people to communicate with each other and to feel a bit more comfortable in our noisy world.